AI is taking off… so much so that many think tanks are now discussing AI that writes its own code and improves itself exponentially, an idea popularized by Ray Kurzweil.

This sci-fi-sounding idea brings both hope and fear. What role will developers have if AI can write not only the software we develop, but also its own code?

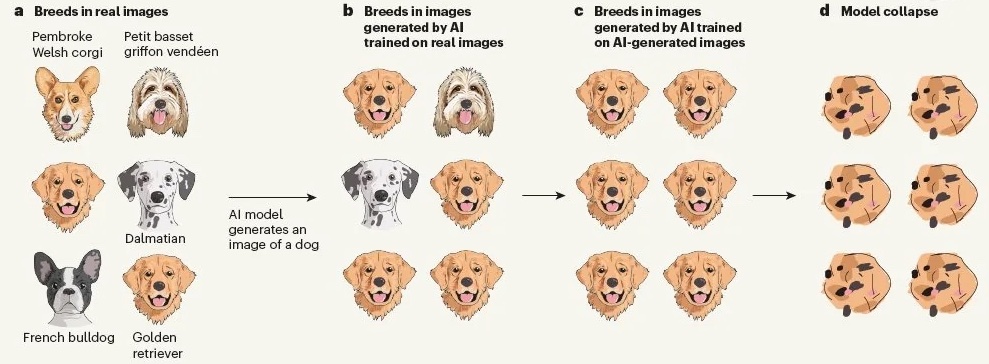

A recent paper details a phenomenon known as “model collapse”:

“A Note on Shumailov et al. (2024): ‘AI Models Collapse When Trained on Recursively Generated Data’” (link at the bottom)

The idea is that a generative AI system trained on synthetic data will inevitably lose its grounding in reality: its outputs degrade slightly, the next round of training uses those degraded outputs, and the errors compound. See the attached image for a visual.
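To make the statistical intuition concrete, here is a toy sketch in Python. It is not the paper's experiment. It assumes the simplest possible "model", a single Gaussian, and the function name `simulate_collapse` and its parameters (10 samples per generation, 500 generations, seed 0) are illustrative choices. Each generation is fit only to samples drawn from the previous generation's fit, so estimation error compounds and the fitted spread tends to shrink toward zero: the tails of the original distribution are gradually forgotten.

```python
import random
import statistics


def simulate_collapse(generations: int = 500, samples_per_gen: int = 10, seed: int = 0) -> list[float]:
    """Return the fitted standard deviation after each generation (toy model).

    Generation 0 is the "real" data distribution, N(0, 1).
    Every later generation fits a Gaussian only to samples drawn from the
    previous generation's fitted Gaussian, i.e. it trains on synthetic data.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        # Draw a small, finite synthetic dataset from the current model.
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        # Refit by maximum likelihood: sample mean and population std (divides by n).
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append(sigma)
    return history


if __name__ == "__main__":
    stds = simulate_collapse()
    for gen in (0, 10, 50, 100, 500):
        print(f"generation {gen:>3}: fitted std = {stds[gen]:.3g}")
```

With only a handful of samples per generation, the fitted standard deviation typically collapses toward zero within a few hundred generations. Larger samples slow the drift but, in this toy setup, do not remove it, because every refit on a finite sample shrinks the expected variance by a factor of (n - 1)/n.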

Anyone who develops software knows the nature of feature requests: adding a feature to an existing structure. With enough experience, a developer sees how the code flows and, much like directing water, adapts it by introducing a new flow alongside the existing one. Layers emerge, and we learn to fit new features onto existing ones without breaking them.

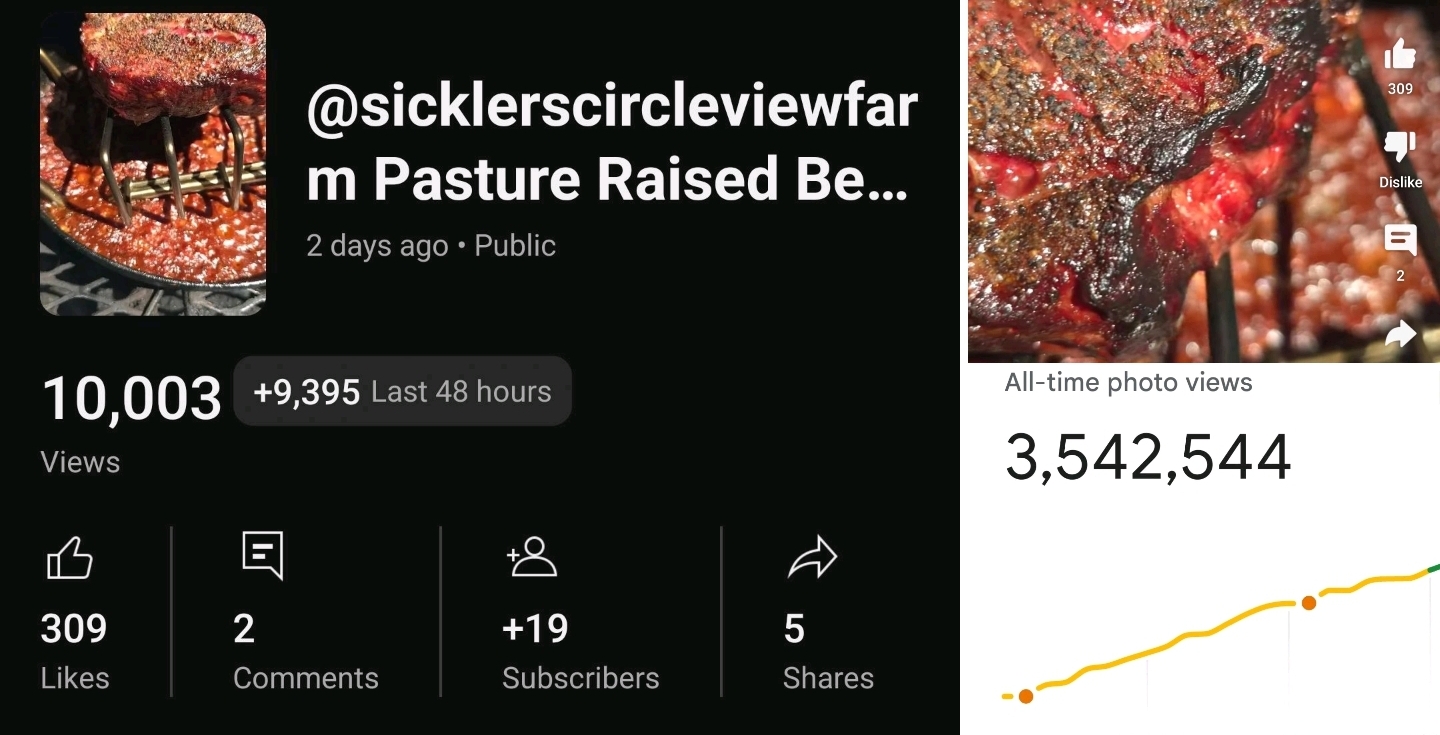

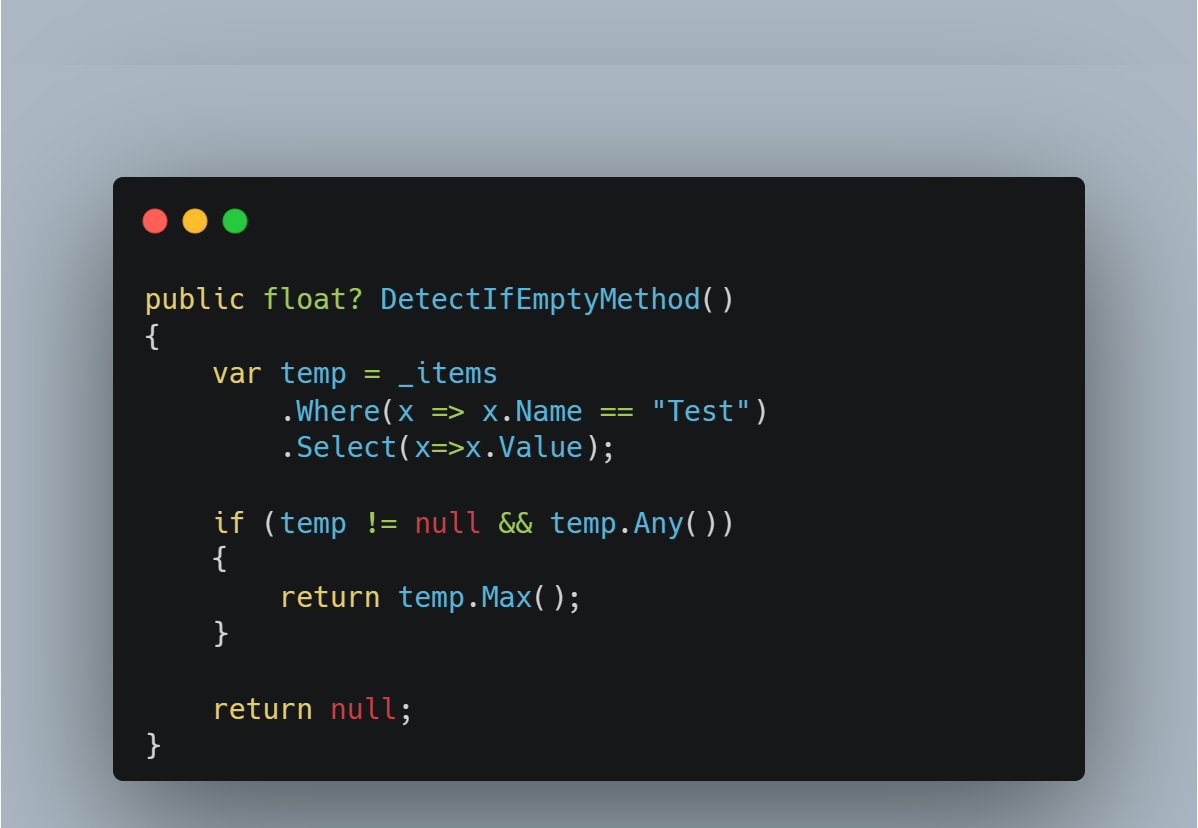

The risk of AI taking over this task without human input is that the code degrades as the AI trains on the code it generated to add new features. In other words, the code keeps degrading until errors become likely and the whole system breaks.

The paper suggests that model collapse is a statistical outcome that is “unavoidable” in generative AI models (e.g., LLMs) trained recursively on their own generated data.

This suggests that developers will benefit from the kind of AI being built today, but that AI will still need to work with human minds to create code that doesn’t break.

We are so quick to think of our minds as mere computational units, and in doing so we have lost a great deal of faith in what makes us human and what we are capable of. Have faith in yourself. Have hope in your humanity.